Best practices: user testing for apps to boost outcomes

Master user testing for apps with a practical, step-by-step guide: plan, recruit, analyze feedback, and deliver an app your users love.

When we talk about user testing for apps, we're really talking about watching real people use your app to see where they get stuck, confused, or delighted. It’s that crucial reality check that closes the gap between what your team thinks is a great experience and what your users actually feel.

This process is what makes an app not just work, but feel effortless and enjoyable.

Why User Testing Is Your App's Secret Weapon

Have you ever launched a feature you were absolutely convinced was a game-changer, only to watch it get completely ignored? It’s a painful but common experience. It all comes down to a simple truth: you are not your user. The things that seem "obvious" to you and your team are often the exact points where your users get tripped up.

This is where consistent user testing for apps saves the day. It helps you stop guessing and start making decisions based on real human behavior. Think of it less like a final exam before launch and more like an ongoing conversation with the people who will make or break your app.

This feedback loop is incredibly powerful.

- It prevents expensive fixes down the line. Finding a major flaw in a Figma prototype is a quick, cheap fix. Finding that same flaw after it's been coded, tested by QA, and pushed to the App Store? That’s a whole different, much more expensive story.

- It’s a massive driver of user retention. When users get frustrated, they don't patiently search for a tutorial or contact support. They just delete the app. A smooth, intuitive experience is one of your best defenses to reduce user churn and keep people coming back.

- It directly impacts your app store ratings. Happy users who can easily accomplish their goals are the ones who leave glowing reviews. Frustrated users? They're far more likely to leave a one-star review detailing their bad experience.

By actually watching people use your product, you uncover the why behind your analytics data. You can see the slight hesitation before they tap a button, hear the sigh of frustration when a screen doesn't load as expected, and pinpoint the exact moment of confusion. Raw data alone will never give you that.

Let’s be honest, the mobile app market is brutal. The entire testing market is on track to hit $13.6 billion by 2026, which tells you how seriously successful companies are taking this.

When you see stats suggesting that 94% of users uninstall apps within the first month, you realize that a fantastic first impression isn't just a nice-to-have. It’s a basic requirement for survival. For a deeper dive, check out these mobile app testing statistics.

Building Your User Testing Blueprint

Jumping into user testing for your app without a clear plan is a classic mistake. It's like starting a road trip without a map—sure, you'll see some interesting things, but you probably won't end up where you intended. A solid blueprint is what turns a vague goal like "see if the app is easy to use" into a focused strategy that actually delivers insights you can act on.

The first, most crucial step is to get specific. Forget asking if your e-commerce app is "user-friendly." Instead, frame it as a concrete task with a measurable outcome. For example, can a first-time user find a specific product, add it to their cart, and check out in under 90 seconds? That level of detail is what separates fuzzy feedback from hard, actionable data.
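If it helps, you can write that goal down as a pass/fail rule before the first session so nobody argues about it afterwards. Here's a minimal sketch of the idea. The `TaskResult` fields and the sample timings are made up for illustration; only the 90-second limit comes from the example above.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    participant: str
    completed: bool   # finished checkout without moderator help
    seconds: float    # time from task start to order confirmation


def meets_goal(result: TaskResult, limit_seconds: float = 90.0) -> bool:
    """A run passes only if the task was completed within the time limit."""
    return result.completed and result.seconds <= limit_seconds


def success_rate(results: list[TaskResult], limit_seconds: float = 90.0) -> float:
    """Share of participants who met the goal (0.0 when there are no results)."""
    if not results:
        return 0.0
    passed = sum(meets_goal(r, limit_seconds) for r in results)
    return passed / len(results)


# Hypothetical sessions, for illustration only.
sample_results = [
    TaskResult("P1", True, 72.0),
    TaskResult("P2", True, 118.0),
    TaskResult("P3", False, 140.0),
    TaskResult("P4", True, 85.5),
]
print(f"{success_rate(sample_results):.0%} met the 90-second goal")
```

A single number like this won't tell you why people struggled, but it gives you a baseline to compare against after the next design change.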

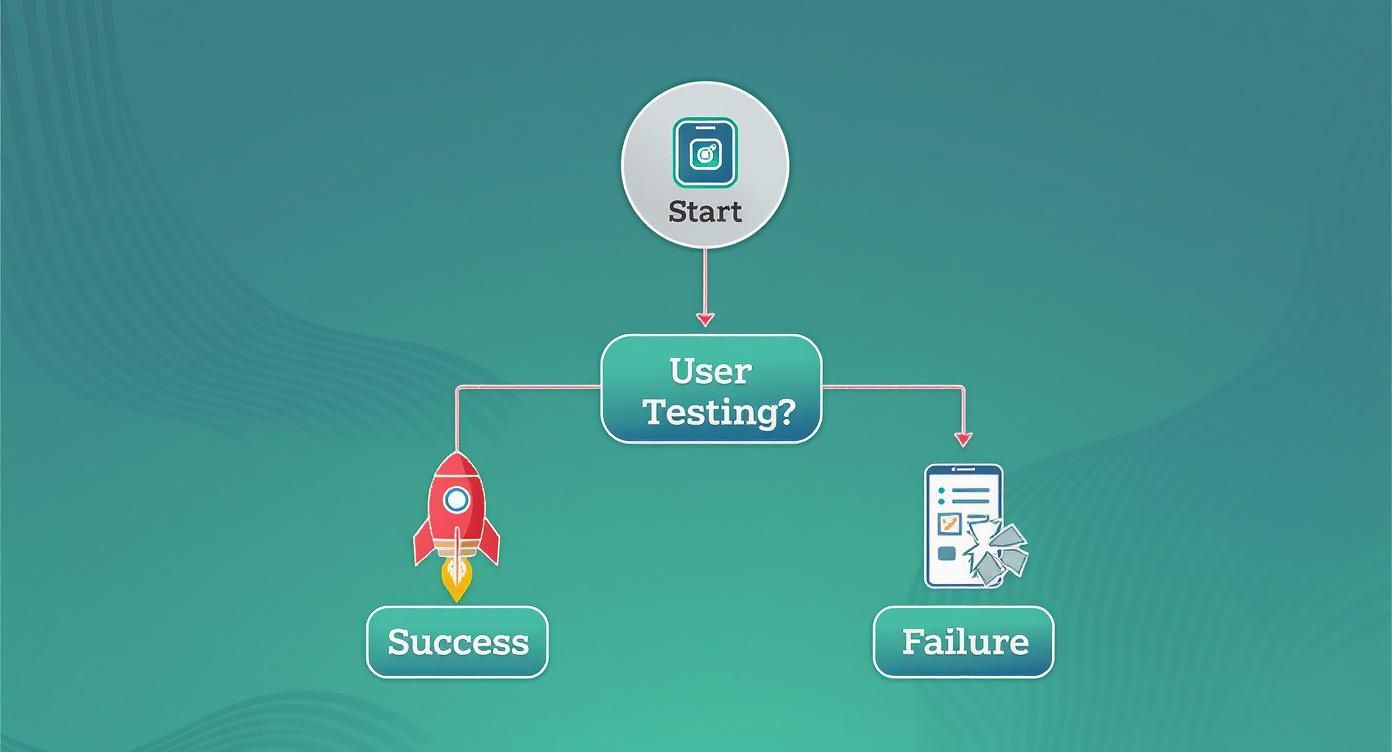

This visual decision tree really nails the core choice every app team faces.

As the infographic shows, it’s a clear fork in the road. Actively pursuing user testing puts you on the path to a successful app, while ignoring it almost always leads to a dead end.

Define Your Target User Personas

Next up: you have to know exactly who you're testing with. I've seen teams build an app for busy parents and then test it with college students—the feedback is worthless. Crafting simple, realistic user personas is the only way to ensure you recruit participants who actually represent your true audience.

These personas don't need to be 10-page biographies. Just stick to the essentials:

- Demographics: What's their age, location, and job?

- Goals: What are they actually trying to do with your app?

- Tech Savviness: Are they early adopters who love new gadgets, or do they get nervous with unfamiliar technology?

For a fitness app, a persona might be "Alex, a 34-year-old marketing manager who wants to squeeze in three 30-minute workouts a week but is always short on time." That simple profile instantly tells you who to look for.

Crafting Realistic Test Scenarios

Once you have your goals and personas locked in, it's time to build scenarios that reflect how people would use your app in the real world. A good scenario gives the user context and a goal, but never tells them the steps.

Don't say, "Tap the profile icon, then go to settings." Instead, try something like, "You just moved and need to update your shipping address. How would you do that?"

The best scenarios feel like real-life situations. This encourages natural behavior from your participants, giving you authentic feedback on your app's flow and discoverability rather than just testing their ability to follow instructions.

Choosing the right testing method is the final piece of your blueprint. The best approach really depends on your specific goals, timeline, and budget. To help you pick the right tool for the job, here's a quick comparison of the most common methods I've used.

Choosing the Right User Testing Method

A comparison of common app user testing methods, outlining their best use cases, pros, and cons to help you select the most effective approach for your project.

| Method | Best For | Pros | Cons |
|---|---|---|---|
| Moderated Remote | Deep dives into complex workflows and understanding user motivations. | Lets you ask follow-up questions; builds rapport with users. | Time-intensive; requires a skilled moderator to get good results. |
| Unmoderated Remote | Getting quick validation on specific tasks and user flows at scale. | Fast and cost-effective; gathers feedback around the clock. | No chance to ask "why"; you risk getting unclear feedback. |
| Guerrilla Testing | Getting fast, high-level feedback on early concepts and prototypes. | Super cheap and quick; provides raw, immediate reactions. | Participants may not be your target audience; not for in-depth analysis. |

Ultimately, there's no single "best" method. The key is to match the technique to your immediate needs, whether that's digging deep into user psychology with a moderated session or getting a quick gut check with some guerrilla testing.

Finding the Right People for Your App Test

The insights you get from user testing for apps are only as good as the people you test with. If you recruit participants who don't fit your target audience, you’ll end up chasing solutions for problems your real users don't actually have. This isn't about filling seats; it's about finding the right people who can give you genuine, relevant feedback.

Your own audience is almost always the best place to start. Think about your email list, social media followers, or even your CRM database. These are people who have already bought into your vision in some way, making them more motivated and likely to provide thoughtful, high-quality feedback.

But what if you need a completely fresh set of eyes, or you're trying to reach a specific demographic you haven't tapped into yet? That’s when dedicated recruiting platforms become incredibly useful. Services like UserTesting, Userlytics, or TryMata give you access to huge panels of testers you can filter by all sorts of specific criteria.

Crafting a Smart Screener Survey

Think of a screener survey as your bouncer. It's a short, carefully designed questionnaire that filters out anyone who isn’t a good fit for your test. The trick is to ask questions that reveal behaviors and mindsets without telegraphing the "right" answers.

Let's say you're testing a new budgeting app built specifically for freelancers. A bad screener question would be, "Are you a freelancer who needs help with budgeting?" It's too leading.

Instead, you'd want to ask behavioral questions that get you the same information indirectly:

- How do you primarily earn your income? (Options: Full-time employment, Freelance/Contract work, Part-time, etc.)

- Which of these tools do you currently use to manage your finances? (Options: A spreadsheet, A dedicated app, Pen and paper, I don't really track them, etc.)

This approach helps you find your ideal participants without letting them game the system just to get picked.

A well-crafted screener is your first line of defense against poor-quality data. It ensures the time you spend conducting tests is with people whose feedback will genuinely help improve your app.
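If your survey tool lets you export responses, the filtering step can be as simple as the sketch below. The answer options mirror the two questions above, but the field names, the sample respondents, and the rule that only "Freelance/Contract work" qualifies are assumptions for the freelancer budgeting example.

```python
# Answers that qualify someone for the (hypothetical) freelancer budgeting test.
QUALIFYING_INCOME = {"Freelance/Contract work"}


def qualifies(response: dict[str, str]) -> bool:
    """Keep only respondents whose primary income matches the target audience."""
    return response.get("income_source") in QUALIFYING_INCOME


def segment(response: dict[str, str]) -> str:
    """Label how the respondent tracks finances today, to help balance the panel."""
    return response.get("finance_tool", "unknown")


# Made-up survey export, for illustration only.
responses = [
    {"name": "R1", "income_source": "Full-time employment", "finance_tool": "A spreadsheet"},
    {"name": "R2", "income_source": "Freelance/Contract work", "finance_tool": "Pen and paper"},
    {"name": "R3", "income_source": "Freelance/Contract work", "finance_tool": "A dedicated app"},
    {"name": "R4", "income_source": "Part-time", "finance_tool": "I don't really track them"},
]

shortlist = [r["name"] for r in responses if qualifies(r)]
segments = {r["name"]: segment(r) for r in responses if qualifies(r)}
print(shortlist)
print(segments)
```

Note that the second question isn't used to reject anyone here. It only tells you whether your shortlist leans too heavily toward one kind of user.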

Getting Compensation and Consent Right

Finding the right people is only half the battle; you also have to treat them with respect. That starts with fair compensation. You're asking for their time and focused effort, and your incentive should reflect that.

For a standard 30-60 minute remote testing session with a general audience, offering between $20 and $60 is a pretty common range. If you need someone with highly specialized knowledge, like a doctor or a senior software engineer, you should expect that rate to go up significantly.

Before you hit record, always get informed consent. This isn't just a formality. Give them a clear, easy-to-understand document that explains what the test will cover, how their data will be handled, and that they have the right to stop at any point. It’s all about building trust and being transparent from the get-go.

Finding good testers has a lot in common with finding new customers—both require a solid plan. For more ideas on reaching the right people, our guide on a strong user acquisition strategy for your app has some great tactics that can help.

Running an Insightful Testing Session

This is where the rubber meets the road. All your planning and recruiting efforts culminate in this moment: the actual testing session. Whether you're running it in person or remotely, your main job is to create an atmosphere where your participant feels comfortable enough to think out loud.

For any moderated test, think of yourself less as an examiner and more as a curious co-pilot. Start by setting a relaxed tone. I always take a few minutes to chat, break the ice, and reassure them that we're testing the app—not them. It's amazing how much more open people become when you explicitly state there are no right or wrong answers.

That simple introduction does wonders. It helps people let their guard down, which means you get to see their real, unfiltered behavior instead of what they think you want to see.

Guiding the Conversation in Moderated Tests

As a moderator, your best friend is the open-ended question. You have to fight the natural urge to lead the witness. Instead of asking something specific like, "Did you find that button confusing?" which puts them on the spot, try a much broader prompt like, "What are your thoughts on this screen?"

Here are a few of my go-to phrases that always seem to get people talking:

- "Looking at this for the first time, what's jumping out at you?"

- "Walk me through what's going through your mind right now."

- "If you were to tap on that, what would you expect to happen?"

- "Was that what you expected? Tell me a bit more about that."

So, what happens when a user gets totally stuck? It’s tempting to jump in and save them, but don't. At least, not right away. That moment of struggle is pure gold—it’s where you uncover the most critical friction points in your app. Give them a beat, then maybe ask, "If you were on your own, what might you try next?"

These moments are so important because users have almost zero patience for clunky apps. In fact, around 88% of users will simply abandon an app if it's buggy or performs poorly. Every hiccup you witness is a chance to fix something that could cost you users down the line. You can read more about mobile app crash rates to get a sense of just how high the stakes are.

Setting Up Successful Unmoderated Tests

When you're not in the room to clarify things, your instructions have to be absolutely bulletproof. For unmoderated tests, clarity is everything. One ambiguous task can completely tank a session and render the data useless.

My number one tip here? Run a quick pilot test on a coworker. If someone on your own team gets confused by the instructions, you can bet your real participants will, too.

Tools like UserTesting are built for this, giving you a structured way to lay out tasks and capture feedback remotely.

The dashboard in a tool like this is invaluable for keeping track of your tests and watching the feedback roll in. It keeps everything organized, which is a lifesaver when you're analyzing the results. And if you're deep in the Apple ecosystem, our guide on how to run effective iOS app testing has some platform-specific advice you might find useful.

The most profound insights often come not from the answers users give, but from the silence and hesitation before they act. In both moderated and unmoderated tests, pay close attention to pauses and non-verbal cues. Active listening and observation will reveal the usability issues that users can't always articulate.

Turning User Feedback Into Actionable Improvements

You've wrapped up your user tests, and now you're staring at a pile of raw data: session recordings, frantic notes, survey answers, and task times. It's easy to feel overwhelmed at this stage, but this is exactly where the gold is. The point of user testing for apps isn't just to collect opinions; it's to turn a jumble of observations into a clear, strategic plan for making your app better.

First things first, you've got to get organized. You need a way to wrangle both the qualitative stuff (what people said and did) and the quantitative metrics (how long things took, success rates). A simple spreadsheet or a tool like Airtable can be a lifesaver. I like to set up columns for the user, the task they were doing, direct quotes that stood out, behaviors I noticed, and any hard numbers like time on task. Getting it all in one place is the only way to start seeing the bigger picture.
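If you'd rather start from a script than a blank spreadsheet, here's a rough sketch of that same column layout. The column names and the three sample rows are my own invention, and the CSV it produces can be imported into whatever spreadsheet tool you use.

```python
import csv
import io
from statistics import median

# Columns follow the layout described above; the exact names are an assumption.
FIELDS = ["user", "task", "quote", "behavior", "seconds_on_task", "completed"]

# Made-up observations, for illustration only.
observations = [
    {"user": "P1", "task": "Update shipping address", "quote": "Where are my settings?",
     "behavior": "Opened the cart first", "seconds_on_task": 95, "completed": True},
    {"user": "P2", "task": "Update shipping address", "quote": "I give up.",
     "behavior": "Scrolled the home screen twice", "seconds_on_task": 140, "completed": False},
    {"user": "P3", "task": "Update shipping address", "quote": "Oh, it's under my profile.",
     "behavior": "Tapped the profile icon right away", "seconds_on_task": 61, "completed": True},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize observations to CSV text that a spreadsheet can import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def summarize(rows: list[dict], task: str) -> dict:
    """Success rate and median time (successful runs only) for one task."""
    task_rows = [r for r in rows if r["task"] == task]
    done = [r for r in task_rows if r["completed"]]
    return {
        "participants": len(task_rows),
        "success_rate": len(done) / len(task_rows) if task_rows else 0.0,
        "median_seconds": median(r["seconds_on_task"] for r in done) if done else None,
    }


summary = summarize(observations, "Update shipping address")
print(to_csv(observations))
print(summary)
```

Keeping the quotes and behaviors in the same rows as the numbers means you can always jump from a bad metric back to what the person actually said.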

Remember, this isn't just about spotting bugs. It's about getting inside your user's head and understanding their experience.

Find Patterns with Affinity Mapping

One of my go-to methods for making sense of all the qualitative feedback is affinity mapping. It sounds fancy, but it's really just a smart way to group your notes. Take every single observation, user quote, or pain point and pop it onto its own sticky note—you can use a physical wall or a digital tool like Miro.

Once everything is laid out, just start dragging similar notes together. You'll quickly see themes start to form all on their own. For example, you might have a few notes like, "couldn't find the 'save' button," "was confused after login," and "didn't know how to edit my profile." Those would naturally cluster into a group you could call something like "Navigation & Discoverability Issues."

This simple exercise is fantastic for moving past one-off complaints and seeing the systemic problems that are tripping up multiple users. It brings order to the chaos and makes the biggest friction points jump right out at you.
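The grouping itself is human judgment, so code can't do the clustering for you. Once each note has a theme label, though, a few lines can count how widespread each theme is. In this sketch the first three notes and their theme come from the example above; the "Form Friction" note, the participant IDs, and the ranking rule are assumptions.

```python
from collections import defaultdict

# (participant, note, theme assigned by the team during the mapping session)
notes = [
    ("P1", "couldn't find the 'save' button", "Navigation & Discoverability Issues"),
    ("P2", "was confused after login", "Navigation & Discoverability Issues"),
    ("P3", "didn't know how to edit my profile", "Navigation & Discoverability Issues"),
    ("P2", "checkout form felt too long", "Form Friction"),  # hypothetical extra note
]


def cluster(tagged_notes):
    """Group notes under the theme the team assigned to them."""
    themes = defaultdict(list)
    for user, text, theme in tagged_notes:
        themes[theme].append((user, text))
    return dict(themes)


def rank_themes(themes):
    """Order themes by how many distinct users hit them, most widespread first."""
    counts = {
        theme: len({user for user, _ in items})
        for theme, items in themes.items()
    }
    return sorted(counts.items(), key=lambda pair: pair[1], reverse=True)


ranked = rank_themes(cluster(notes))
for theme, user_count in ranked:
    print(f"{theme}: {user_count} user(s)")
```

Counting distinct users rather than raw notes keeps one very vocal participant from inflating a theme.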

Prioritize Your Fixes with a Framework

Okay, so you've identified the key themes. Now what? You can't fix everything at once, and that's where a lot of teams get stuck. A simple but incredibly effective way to decide what to work on first is to plot each issue on an impact/effort matrix.

This framework helps you bucket every problem into one of four categories:

- High Impact, Low Effort (Quick Wins): These are your no-brainers. Jump on them immediately. A perfect example is renaming a button with a confusing label.

- High Impact, High Effort (Major Projects): These are the big-ticket items that need real planning, like redesigning a clunky onboarding flow.

- Low Impact, Low Effort (Fill-in Tasks): These are nice-to-haves you can slot in when developers have a bit of downtime.

- Low Impact, High Effort (Reconsider): These are the issues you should probably park for now. The return just isn't worth the investment.

By knocking out the "quick wins" first, you deliver immediate value to your users and build momentum for the bigger projects. This approach helps keep your development resources focused on the most meaningful improvements.
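To make the four buckets concrete, here's a small sketch that sorts a backlog by quadrant. The 1-5 scoring scale, the threshold of 3, and the sample issues and scores are all assumptions; your team would agree on its own numbers.

```python
# Scores use a hypothetical 1-5 scale agreed on by the team.
def quadrant(impact: int, effort: int, threshold: int = 3) -> str:
    """Map an issue to one of the four buckets; scores above threshold count as high."""
    high_impact = impact > threshold
    high_effort = effort > threshold
    if high_impact:
        return "Major Project" if high_effort else "Quick Win"
    return "Reconsider" if high_effort else "Fill-in Task"


# Work order: quick wins first, parked items last.
ORDER = ["Quick Win", "Major Project", "Fill-in Task", "Reconsider"]

# (issue, impact, effort) with made-up scores, for illustration only.
issues = [
    ("Redesign clunky onboarding flow", 5, 5),
    ("Rename confusing button label", 4, 1),
    ("Add animated splash screen", 1, 4),
    ("Fix typo on settings screen", 2, 1),
]

backlog = sorted(issues, key=lambda issue: ORDER.index(quadrant(issue[1], issue[2])))
for name, impact, effort in backlog:
    print(f"{quadrant(impact, effort):<14} {name}")
```

The scores are only as good as the conversation behind them, so treat the output as a starting point for discussion rather than a verdict.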

The mobile world is a minefield. One analysis of 1.3 million apps discovered that a shocking 14% had security flaws that exposed user data. Usability sessions won't catch flaws like these by themselves, so pair them with dedicated security testing: by methodically testing and improving your app on both fronts, you're not just smoothing out the user experience, you're also protecting it. You can dig deeper into these mobile app security challenges if you're curious.

Common Questions About App User Testing

Even the most buttoned-up testing plan runs into questions. That's totally normal. Getting ahead of the most common ones helps you avoid getting bogged down in "analysis paralysis" and keeps the project moving.

Here are the big questions I see come up time and time again.

How Many Users Do I Really Need?

This is the classic, million-dollar question. And the answer is almost always fewer than you think. You don't need to recruit a stadium full of people to find the most significant, show-stopping usability problems.

The Nielsen Norman Group famously showed that testing with just five users will reveal about 85% of the core usability issues. After the fifth person, you're mostly just hearing the same feedback on repeat, which means you're getting diminishing returns on your time and effort.
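That 85% figure comes from a simple model published by Jakob Nielsen and Tom Landauer: the share of problems found with n users is 1 - (1 - L)^n, where L is the share a single user uncovers. They reported an average L of about 31% across the projects they studied, so treat that default as an assumption; your own app may behave differently. A quick sketch of the curve:

```python
def share_of_problems_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Nielsen-Landauer model: 1 - (1 - L)^n.

    per_user_rate (L) defaults to the 31% average Nielsen reported;
    it is an assumption here, not a measurement of your app.
    """
    return 1 - (1 - per_user_rate) ** n_users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users -> {share_of_problems_found(n):.0%} of problems found")
```

With the default rate, five users land at roughly 84 to 85%, and each extra participant after that adds less than the one before.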

Now, if you're running a quantitative study, like benchmarking task times or success rates to see which design performs better, you'll need a bigger sample size. For that, you're often looking at 20 or more participants to get statistically meaningful data, and a live A/B test usually needs far more than that. But for uncovering why something isn't working, a small group is your secret weapon.

For most app teams, a great starting point is testing with 3-5 users for each of your main user personas. It's fast, budget-friendly, and gives you a clear, prioritized backlog of improvements.

What’s the Difference Between User Testing and QA?

It’s easy to mix these two up, but they play completely different roles. Think of it this way: they’re answering two very different, but equally important, questions about your app.

Quality Assurance (QA) testing is all about the technical side of things. QA testers are hunting for bugs, crashes, and performance glitches. They're trying to answer the question: “Did we build this thing correctly, according to the specs?”

User testing, on the other hand, is all about the human experience. It zeroes in on how intuitive and easy your app is to use. User testing answers a much more fundamental question: “Did we build the right thing that people actually want and can use?”

Here’s a simple breakdown:

| Aspect Compared | QA Testing | User Testing |
|---|---|---|
| Primary Goal | Find and log software bugs. | Uncover usability and design flaws. |
| Who Performs It | QA engineers and developers. | Real, representative end-users. |
| Focus | Technical functionality. | User experience and intuition. |
| Outcome | A bug-free application. | An intuitive, user-friendly app. |

Both are absolutely critical. A bug-free app that no one can figure out is just as useless as a beautiful app that crashes every five minutes.

How Much Should I Pay My Test Participants?

This is a big one. Paying people fairly is the key to getting good participants who provide thoughtful, honest feedback. Your payment shows that you respect their time and the value they're bringing to your project.

For a general audience in a remote test lasting 30 to 60 minutes, a good range is anywhere from $20 to $60. For most consumer apps, that’s plenty to attract people who are motivated and reliable.

But what if you’re testing an app for a highly specialized audience, like surgeons or financial traders? You’ll need to increase your incentive significantly to match their professional rates. For these niche roles, don't be surprised to see compensation go up to $100 per hour or even more.

The goal is to offer an amount that feels like a genuine "thank you" for their expertise, not a cheap bribe. Always be upfront about the payment amount and when they can expect to receive it. It builds trust right from the start.
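For a rough sense of what a round of testing costs in incentives, you can multiply it out. This sketch uses the 3-5 users per persona and the $20 to $60 range mentioned in this guide as defaults; the function name and the two-persona example are hypothetical, and platform fees or specialist rates are not included.

```python
def incentive_budget(
    personas: int,
    users_per_persona: tuple[int, int] = (3, 5),
    rate_usd: tuple[int, int] = (20, 60),
) -> tuple[int, int]:
    """Return the (low, high) incentive total in USD for one round of testing."""
    low = personas * users_per_persona[0] * rate_usd[0]
    high = personas * users_per_persona[1] * rate_usd[1]
    return low, high


low, high = incentive_budget(personas=2)
print(f"Two personas: ${low} to ${high} in incentives")
```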
